When you look closely at a photo mosaic, you see that each piece is a tiny, square photograph. As you step away, the images blend together into one large image. The same concept applies to DMDs (digital micromirror devices). Look closely at a DMD and, instead of tiny photographs, you see tiny square mirrors that reflect light. From far away (or once the light is projected onto a screen), you see a picture.

The number of mirrors corresponds to the resolution of the screen, with one mirror per pixel. DLP 1080p technology uses more than 2 million mirrors (1920 × 1080 = 2,073,600) for true 1920×1080 resolution, the highest available when this was written.

In addition to the mirrors, the DMD unit includes:

- A CMOS DDR SRAM chip, whose memory cells electrostatically tilt each mirror to the on or off position, depending on the stored logic value (0 or 1)
- A heat sink
- An optical window, which lets light pass through while protecting the mirrors from dust and debris

DLP Projection

Before any of the mirrors switch to their on or off positions, the chip rapidly decodes the bit-streamed image data that enters the semiconductor. It then converts the data from interlaced to progressive format, so that every line of the picture is drawn in each frame rather than in two alternating fields. Next, the chip scales the picture to fit the screen and makes any necessary adjustments, including brightness, sharpness and color quality. Finally, it relays all the information to the mirrors, completing the whole process in just 16 microseconds.
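The source does not say which deinterlacing algorithm the chip uses. One simple method, called "weave", interleaves the lines of two interlaced fields into a single progressive frame. The sketch below is purely illustrative. It assumes each field is a list of pixel rows, with the first field holding the even-numbered lines and the second holding the odd-numbered lines.

```python
def weave(top_field, bottom_field):
    """Interleave two interlaced fields into one progressive frame ("weave").

    Assumption: top_field holds frame rows 0, 2, 4, ... and bottom_field
    holds rows 1, 3, 5, ...; both are lists of rows of equal length.
    """
    if len(top_field) != len(bottom_field):
        raise ValueError("fields must have the same number of rows")
    frame = []
    for top_row, bottom_row in zip(top_field, bottom_field):
        frame.append(top_row)     # even-numbered line from the first field
        frame.append(bottom_row)  # odd-numbered line from the second field
    return frame


top = [[10, 10], [30, 30]]
bottom = [[20, 20], [40, 40]]
print(weave(top, bottom))  # [[10, 10], [20, 20], [30, 30], [40, 40]]
```

Weave works well for still content. Real video processors typically switch to more elaborate methods when there is motion between fields.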

The mirrors are mounted on tiny hinges that let them tilt up to ±12°, either toward the light source (ON) or away from it (OFF), as often as 5,000 times per second. A mirror that is switched on more often than off creates a light gray pixel; a mirror that is off more often than on creates a dark gray pixel.
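The relationship between on-time and gray level can be sketched as a duty cycle. This is a minimal model under stated assumptions: the eye simply averages the light it receives over one frame, the list of states is a hypothetical sampling of the mirror at each switching opportunity, and the 0-255 output scale is an illustrative choice.

```python
def gray_level(mirror_states, max_level=255):
    """Map ON (1) / OFF (0) mirror states within one frame to a gray level.

    Assumes perceived brightness is proportional to the fraction of the
    frame the mirror spends ON (its duty cycle).
    """
    if not mirror_states:
        raise ValueError("need at least one mirror state")
    duty_cycle = sum(mirror_states) / len(mirror_states)
    return round(duty_cycle * max_level)


mostly_on = [1, 1, 1, 0]   # ON for 75% of the frame
mostly_off = [1, 0, 0, 0]  # ON for 25% of the frame
print(gray_level(mostly_on))   # 191 (light gray)
print(gray_level(mostly_off))  # 64 (dark gray)
```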

The light the mirrors reflect is directed through a lens and onto the screen, creating an image. By varying how long each mirror stays on, the mirrors can produce up to 1,024 shades of gray, converting the video or graphic signal entering the DLP into a highly detailed grayscale image. DLPs can also produce very deep black levels, because a mirror held in the off position sends almost no light to the screen.
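1,024 shades is 2^10, which corresponds to 10 bits per pixel. A common textbook way to reach that many levels with on/off mirrors is binary-weighted pulse-width modulation. Each bit of the gray value keeps the mirror ON for a time slice proportional to that bit's weight. The sketch assumes 10 bit planes and equal-length unit time slices. `bit_plane_schedule` and `on_time_slices` are hypothetical helpers, and real DLP controllers use more elaborate sequences.

```python
BITS = 10  # 2**10 = 1,024 gray levels


def bit_plane_schedule(level, bits=BITS):
    """Split a gray level into binary-weighted bit planes.

    Returns (weight, mirror_on) pairs: bit k keeps the mirror ON for
    2**k time slices if that bit of `level` is set.
    """
    if not 0 <= level < 2 ** bits:
        raise ValueError(f"level must be in [0, {2 ** bits - 1}]")
    return [(2 ** k, bool((level >> k) & 1)) for k in range(bits)]


def on_time_slices(level, bits=BITS):
    """Total time slices the mirror is ON; equals `level` by construction."""
    return sum(weight for weight, on in bit_plane_schedule(level, bits) if on)


print(2 ** BITS)             # 1024 distinct levels
print(on_time_slices(0))     # 0: mirror never ON, darkest black
print(on_time_slices(1023))  # 1023: mirror ON for every slice, full white
print(on_time_slices(600))   # 600
```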

To add color to that image, the white light from the lamp passes through a transparent, spinning color wheel and onto the DLP chip. The color wheel, synchronized with the chip, filters the light into red, green and blue, and the on and off states of each mirror are coordinated with these three basic building blocks of color. A single-chip DLP projection system can create 16.7 million colors. At any given moment, each pixel of light on the screen is red, green or blue; DLP technology relies on the viewer's eyes to blend these pixels into the intended colors of the image. For example, a mirror responsible for a purple pixel reflects only the red and blue light to the screen. The pixel itself is a rapidly alternating flash of red and blue light, which our eyes blend into the intended hue.
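The purple example can be sketched as field-sequential color. Within each frame, the mirror's on-time is set separately for the red, green and blue fields as the wheel turns, and the eye averages the three fields. This is a sketch under stated assumptions: 8 bits per primary (256^3 = 16,777,216, the "16.7 million" figure) and an eye that averages perfectly. The helper names are hypothetical.

```python
FIELDS = ("red", "green", "blue")  # color-wheel segments, in order


def mirror_schedule(target_rgb):
    """Fraction of each color field during which the mirror stays ON.

    target_rgb holds 8-bit values (0-255) for red, green and blue.
    """
    return {field: value / 255 for field, value in zip(FIELDS, target_rgb)}


def perceived_color(schedule):
    """Assume the eye averages the three sequential fields into one color."""
    return tuple(round(schedule[field] * 255) for field in FIELDS)


purple = (128, 0, 128)
schedule = mirror_schedule(purple)
print(schedule["green"])          # 0.0: mirror stays OFF during the green field
print(perceived_color(schedule))  # (128, 0, 128)
print(256 ** 3)                   # 16777216
```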

A DLP Cinema projection system has three chips and, unlike a single-chip system, needs no color wheel; it can produce no fewer than 35 trillion colors. In a three-chip system, the white light from the lamp passes through a prism that splits it into red, green and blue, and each chip is dedicated to one of these colors. The colored light that the mirrors reflect is then recombined and passes through the projection lens to form an image.
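The two color counts follow from the number of levels per primary raised to the third power. The 16.7 million figure matches 8 bits per primary. The 35 trillion figure is consistent with about 15 bits per primary (32,768 levels each). That second reading is our interpretation, not a published specification.

```python
def color_count(bits_per_primary: int, primaries: int = 3) -> int:
    """Number of distinct colors when each primary has 2**bits levels."""
    return (2 ** bits_per_primary) ** primaries


print(color_count(8))   # 16777216: about 16.7 million (single-chip figure)
print(color_count(15))  # 35184372088832: about 35 trillion
```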

Texas Instruments has also developed a system called BrilliantColor, which uses system-level enhancements to give truer, more vibrant colors. Customers can expand the color palette beyond red, green and blue by adding yellow, white, magenta and cyan for greater color accuracy.

Once the DMD has created a picture out of the light and the color wheel has added color, the light passes through a lens. The lens projects the image onto the screen.

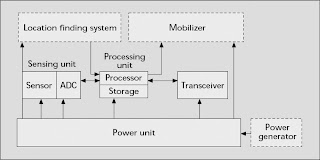

may be supported by power scavenging units such as solar cells. There are also other subunits that are application dependent. Most sensor network routing techniques and sensing tasks require highly accurate knowledge of location, so it is common for a sensor node to have a location-finding system. A mobilizer may sometimes be needed to move sensor nodes when that is required to carry out their assigned tasks. All of these subunits may need to fit into a matchbox-sized module or even smaller. In the past, sensors were connected by wire lines. Today, ad hoc networking and wireless technologies provide intersensor communication, which greatly improves the flexibility of installing and configuring a sensor network. Sensor nodes coordinate among themselves to produce high-quality information about the physical environment. A base station (the sink) may be a fixed or mobile node capable of connecting the sensor network to an existing communications infrastructure or to the Internet, where a user can access the reported data. Networks of unattended sensor nodes may have profound effects on the efficiency of many military and civil applications, such as target field imaging, intrusion detection, weather monitoring, security, tactical surveillance, distributed computing, detecting ambient conditions (temperature, movement, sound, and light) or the presence of certain objects, inventory control, and disaster management. A sensor network in these applications can be deployed randomly (e.g., dropped from an airplane) or planted manually (e.g., fire alarm sensors in a facility). For example, in a disaster management application, a large number of sensors can be dropped from a helicopter.
These networked sensors can assist rescue operations by locating survivors, identifying risky areas, and making the rescue team more aware of the overall situation in a disaster area, such as after a tsunami or an earthquake. Sensor nodes are densely deployed in close proximity to, or embedded within, the medium to be observed. They usually work unattended, often in remote geographic areas. They may operate continuously inside large machinery, at the bottom of an ocean, in a biologically or chemically contaminated field, on a battlefield beyond enemy lines, or in a home or large building. Driven by these exciting and demanding applications of wireless sensor networks, several critical requirements have been actively addressed from the network perspective to support viable deployments, as follows:
